A Developer's Guide to MCP: Model, Context, and Protocol

MCP (Model Context Protocol) was introduced by Anthropic (the company behind Claude) in Q4 2024. Since then it has gained a lot of popularity. Many companies have built MCP servers to help engineers increase their productivity. Check the list here.

Do check the official MCP documentation.

If you search or ask someone "what is MCP?", the response you will usually get is: "MCP is like USB-C for AI applications".

And my response to this was: "huh!! what does that even mean?"

Well, in this blog I will take you on a journey to understand and learn MCP the way I did.

Prerequisites

1 . Any programming language

2 . Experience working with AI tools or apps, e.g. Claude, Cursor, ChatGPT, etc.

My first question was: why do we even need MCP?

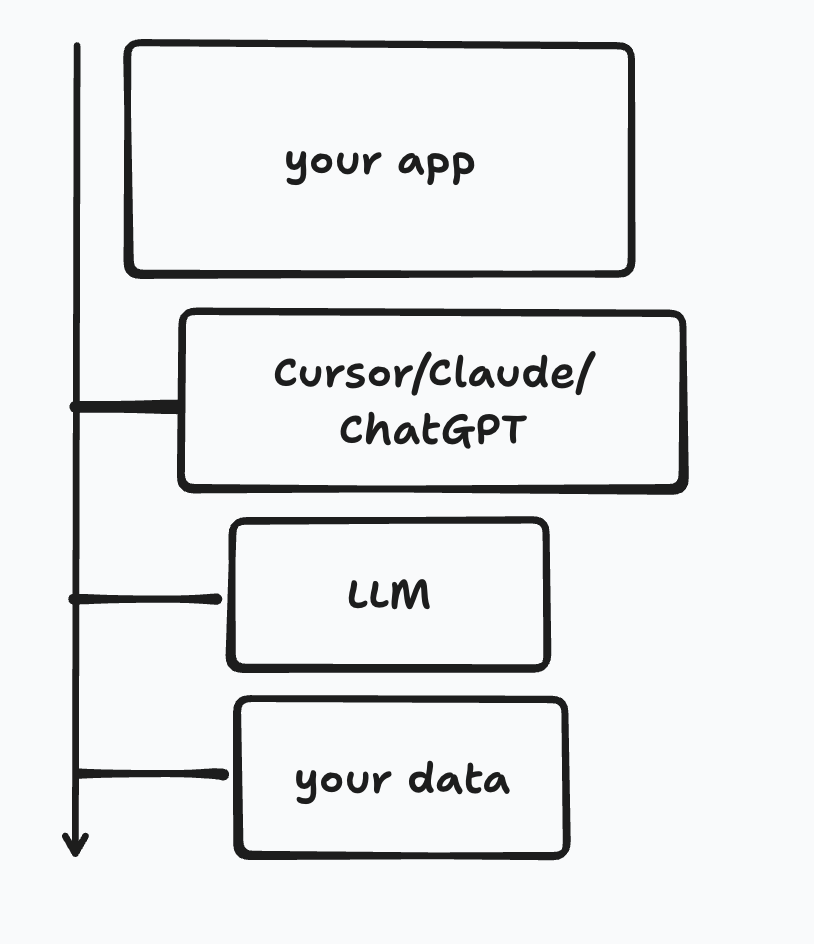

To understand this, we need an app that uses an AI model. At a high level, your AI app's architecture will look like this:

Example: Imagine an app that helps you while coding. You’re in VSCode, you open the Claude extension, and ask: “Share S3 features”.

Claude replies with an answer based on its training data.

But here’s the catch: LLMs have a knowledge cutoff. They don’t automatically know the latest AWS updates, and they can’t directly pull from the internet unless you integrate a retrieval system.

Now, what if you also need answers from your own resources — like your company’s database, internal docs, GitHub repos, or Google Drive? That data is not available to the LLM by default.

The solution: instead of building lots of one-off APIs, we use MCP (Model Context Protocol). MCP standardises how your app connects the model to different data sources and tools, so the model can fetch the right information at the right time.

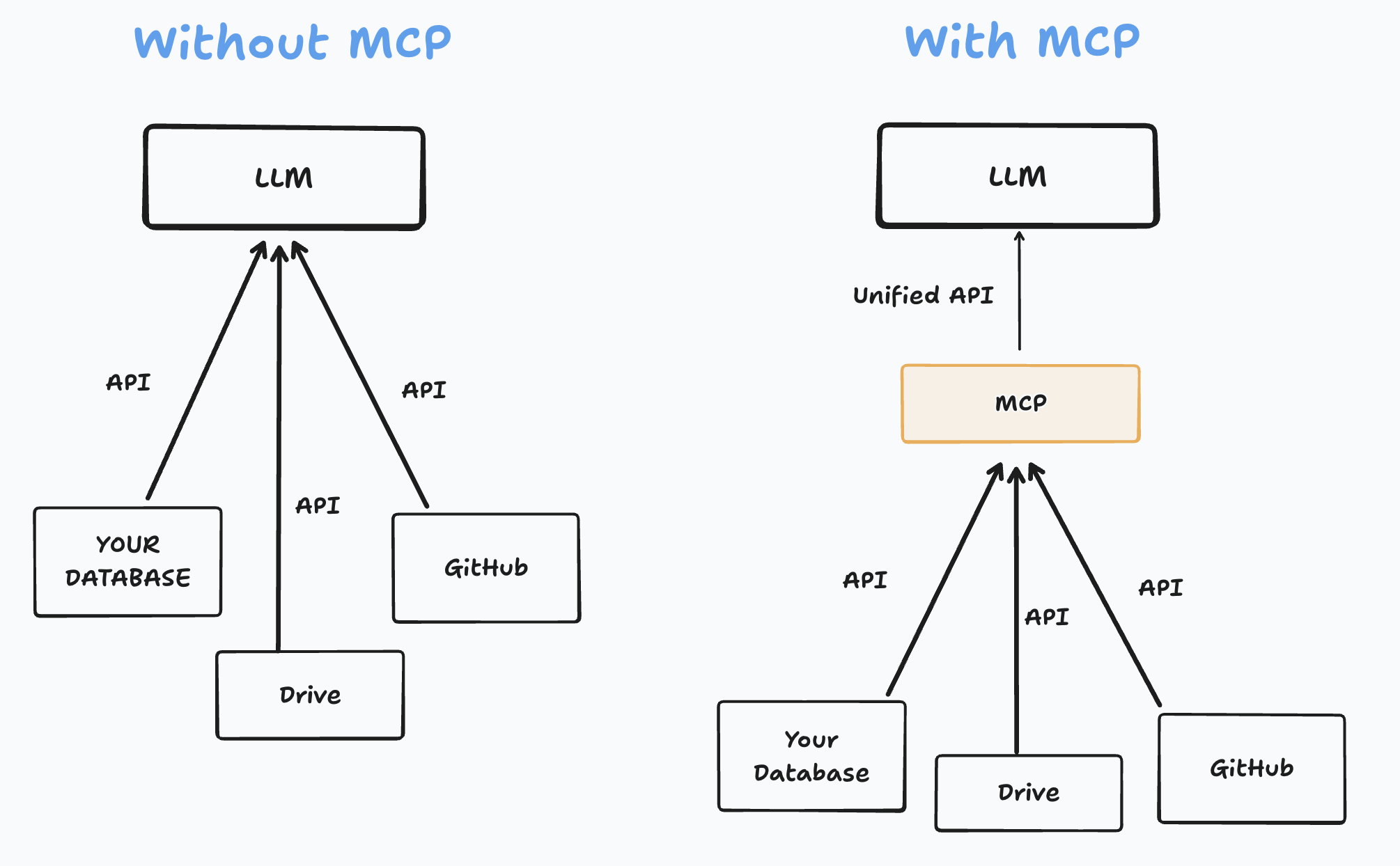

AI app before and after MCP

In the last section, we saw an example of an AI app and its limitation. Let’s see how we can resolve this limitation and how MCP helps here.

Without MCP

In the above diagram, you can see that we use a different API for each source to pull data into our app. This is not a clean approach.

With MCP

In the above diagram, you can see our AI app before and after MCP. MCP pulls the data from the different sources and delivers it to our app through a unified API. This is a cleaner approach.

MCP is a clean, standardized way of pulling data (from multiple sources) into our LLMs or AI applications. This is the advantage you get by using MCP.

This is why the comparison "MCP is like USB-C" is so popular.
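
To make the before/after contrast concrete, here is a minimal Python sketch. Everything in it is hypothetical: the three fetch functions stand in for one-off integrations, and `call_tool` stands in for the single entry point an MCP-style layer would give your app. It does not use the real MCP SDK.

```python
# "Before": hypothetical one-off integrations, each with its own call shape.
def query_company_db(sql):
    return f"rows for: {sql}"

def read_drive_file(file_id):
    return f"contents of {file_id}"

def search_github(repo, query):
    return f"matches for '{query}' in {repo}"

# "After": every source is registered under a name and called the same way,
# with a plain dict of arguments.
TOOLS = {
    "db.query": lambda args: query_company_db(args["sql"]),
    "drive.read": lambda args: read_drive_file(args["file_id"]),
    "github.search": lambda args: search_github(args["repo"], args["query"]),
}

def list_tools():
    """Names the app (and the model) can choose from."""
    return sorted(TOOLS)

def call_tool(name, arguments):
    """Single entry point the app uses, whatever the source is."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](arguments)
```

The app only needs to know `list_tools` and `call_tool`; adding a new source means registering one more entry rather than writing new glue code in the app.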

MCP vs RAG

If you are asking "how is MCP different from RAG?", then congratulations, you are asking the right question.

| | RAG | MCP |
|---|---|---|
| Purpose | Enriches the LLM’s knowledge by injecting external context at query time. | Gives the LLM a standardised way to interact with tools, APIs, and data sources. |
| How it works | Retrieves relevant documents → appends them to the prompt → LLM generates a better response. | LLM selects from available tools → MCP executes the action → returns structured results → LLM uses them. |
| Focus | Make LLMs smarter with more up-to-date/contextual information. | Make LLMs act by bridging them with actions (e.g. query DB, fetch from GitHub, call an API). |
| Example | Ask “What are the latest S3 features?” → RAG fetches AWS docs → LLM summarises them. | Ask “Create an S3 bucket in my dev AWS account” → MCP lets LLM call AWS API through a defined tool → confirms bucket creation. |
| In short | RAG → makes LLMs smarter by adding retrieved info (boosts knowledge). | MCP → makes LLMs do work by giving them structured access to tools + data (enables actions). |
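
The two "How it works" rows can be sketched side by side in Python. This is a toy illustration under stated assumptions: there is no real LLM or AWS call here, the keyword matching in `retrieve` is a stand-in for a proper retriever, and `create_bucket` is a hypothetical tool.

```python
# Toy document store; a real RAG setup would use a search index or vector DB.
DOCS = {
    "s3-features": "S3 supports versioning and lifecycle rules.",
    "ec2-pricing": "EC2 offers on-demand and spot pricing.",
}

def retrieve(question):
    """RAG step 1: pick docs whose key shares a word with the question."""
    words = set(question.lower().replace("?", "").split())
    return [text for key, text in DOCS.items() if words & set(key.split("-"))]

def build_rag_prompt(question):
    """RAG step 2: retrieved text is added to the prompt before the model runs."""
    context = "\n".join(retrieve(question))
    return f"Context:\n{context}\n\nQuestion: {question}"

def create_bucket(name):
    """Hypothetical tool; a real one would call the AWS API."""
    return {"bucket": name, "status": "created"}

TOOLS = {"create_bucket": create_bucket}

def run_tool_call(tool_call):
    """MCP-style flow: the model picks a tool and arguments, the app executes it
    and hands the structured result back to the model."""
    func = TOOLS[tool_call["name"]]
    return func(**tool_call["arguments"])
```

In the RAG path the model only ever receives more text. In the MCP-style path the model's output is a request such as `{"name": "create_bucket", "arguments": {"name": "dev-logs"}}`, and something actually happens as a result.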

What is MCP (Model Context Protocol)

So far we have answered the following:

1 . Why do we need MCP?

2 . Where does it fit in our AI products?

3 . What problem does it solve?

Now let's understand what MCP is.

1. Model

If you work in the AI domain or use any AI application, you’ve likely heard the term model. In this context, a model typically refers to an LLM (Large Language Model). These models are trained on vast datasets that include text, code, and sometimes other modalities like images or audio. Their capabilities come from the patterns they learn during training.

There are many well-known models developed by major organizations such as OpenAI, Google, and Meta, e.g. GPT (the model family behind ChatGPT), Gemini, and Llama.

2. Context

Context is a critical component in AI applications and model interactions.

Simply put, context refers to the information the model uses to understand your request. This includes the conversation history, previous prompts, and any additional data you provide. Without proper context, a model may generate incomplete or inaccurate answers.
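
As a rough illustration of what "context" looks like in practice, here is a small Python sketch. The `role`/`content` message shape is an assumption borrowed from common chat-style APIs, and `build_context` is a hypothetical helper, not part of MCP.

```python
def build_context(system_prompt, history, extra_data, user_message):
    """Assemble everything the model will see for one request."""
    messages = [{"role": "system", "content": system_prompt}]
    # Earlier turns of the conversation, oldest first.
    messages.extend(history)
    # Any additional data you provide (docs, query results, file contents).
    if extra_data:
        messages.append({"role": "user", "content": f"Reference data:\n{extra_data}"})
    # The actual request always comes last.
    messages.append({"role": "user", "content": user_message})
    return messages


history = [
    {"role": "user", "content": "I am working on an S3 upload script."},
    {"role": "assistant", "content": "Sure, what do you need help with?"},
]
context = build_context(
    "You are a coding assistant.",
    history,
    "Bucket name: dev-logs",
    "Why is my upload failing?",
)
```

If you drop the history or the reference data, the model still answers, but it has to guess what "my upload" refers to. That is the "incomplete or inaccurate answers" problem described above.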

3. Protocol

If you have worked with web applications, you’re likely familiar with the term protocol. In general, a protocol defines the rules and methods for transferring data between systems.

In the context of AI, protocols such as Model Context Protocol (MCP) are emerging to standardize how AI apps (clients) supply models with information from external tools and data sources, manage context, and ensure interoperability across different AI systems.

Put together: MCP takes your model, supplies it with context, and does so through a standard protocol.
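
To see what "protocol" means concretely: MCP messages are JSON-RPC 2.0 objects, and `tools/list` and `tools/call` are method names from the MCP specification. The Python sketch below only builds the message shapes as plain dicts. The tool name `search_docs`, its arguments, and the response text are hypothetical, and the official specification remains the reference for the full set of fields.

```python
import json

def make_request(request_id, method, params=None):
    """Build a JSON-RPC 2.0 request as a plain dict."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message

# Ask the server which tools it offers.
list_request = make_request(1, "tools/list")

# Ask the server to run one tool with some arguments.
call_request = make_request(
    2,
    "tools/call",
    {"name": "search_docs", "arguments": {"query": "S3 features"}},
)

# Shape of a possible successful reply; the "id" ties it to the request.
call_response = {
    "jsonrpc": "2.0",
    "id": 2,
    "result": {"content": [{"type": "text", "text": "placeholder result text"}]},
}

print(json.dumps(call_request, indent=2))
```

Because every server speaks the same message format, a client written once can talk to any of them.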

Tech Stack for MCP

You can use the following languages to build MCP servers and clients. Each of them has an MCP package that you can import and start using. We will explore this in our next blog.

1 . Java

2 . Python

3 . Rust

4 . Go

And many more: TypeScript, Kotlin, C#, etc.

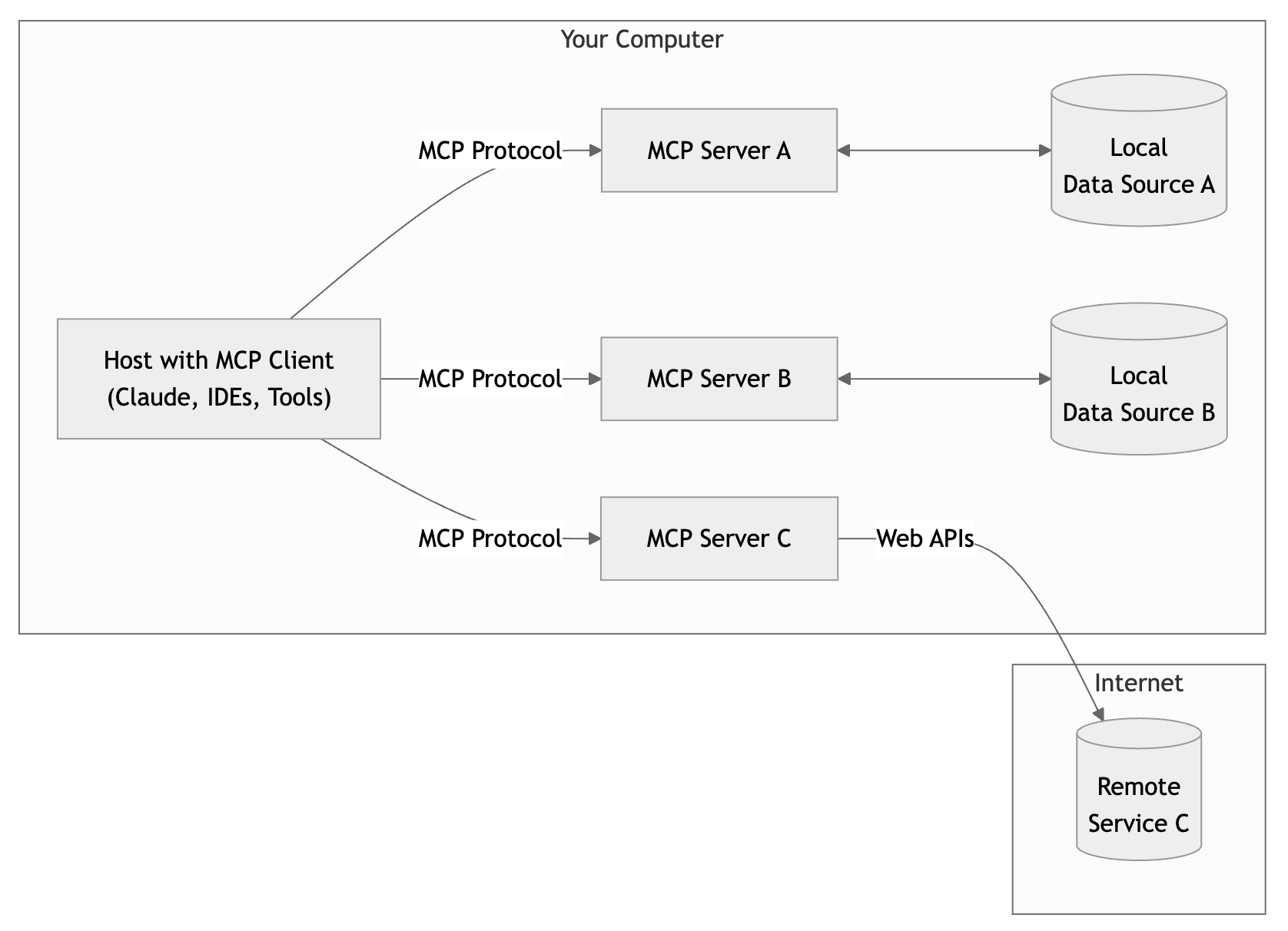

MCP Architecture

Components

In MCP, we have 3 components:

1 . MCP Host: The AI application that coordinates and manages one or more MCP clients.

2 . MCP Client: A component that maintains a connection to an MCP server and obtains context from it for the MCP host to use.

3 . MCP Server: A program that provides context to MCP clients. It can run on your local machine, on a different machine, or in the cloud.

Here is the flow: You (in VSCode) → MCP Host (Claude IDE app) → MCP Client (bridge/connector) → MCP Server (AWS Docs source) → response flows back to Claude in the IDE.

Note: One host can manage multiple clients talking to multiple servers.
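
The three roles can be sketched as three small Python classes. This is a simplified in-process model, not the real SDK: the class names mirror the roles above, but the methods, the server names `aws-docs` and `github`, and their tools are all made up for illustration.

```python
class Server:
    """Stands in for an MCP server exposing named tools."""

    def __init__(self, name, tools):
        self.name = name
        self.tools = tools  # tool name -> callable

    def list_tools(self):
        return sorted(self.tools)

    def call_tool(self, tool, arguments):
        return self.tools[tool](**arguments)


class Client:
    """Holds the connection to exactly one server."""

    def __init__(self, server):
        self.server = server

    def list_tools(self):
        return self.server.list_tools()

    def call_tool(self, tool, arguments):
        return self.server.call_tool(tool, arguments)


class Host:
    """The AI app: owns one client per server and routes tool calls."""

    def __init__(self):
        self.clients = {}

    def connect(self, server):
        self.clients[server.name] = Client(server)

    def available_tools(self):
        return {name: client.list_tools() for name, client in self.clients.items()}

    def call(self, server_name, tool, arguments):
        return self.clients[server_name].call_tool(tool, arguments)


host = Host()
host.connect(Server("aws-docs", {"search": lambda query: f"docs about {query}"}))
host.connect(Server("github", {"list_repos": lambda org: [f"{org}/api", f"{org}/web"]}))
```

In a real setup the client and server are separate processes (or separate machines) and the method calls above become messages sent over a transport, which is the next topic.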

Transport Mechanisms

MCP defines how the client and server talk to each other. At the moment, there are two transport options:

1 . Stdio transport: Use Stdio for local, lightweight setups (like connecting your IDE to local docs).

2 . Streamable HTTP transport: Use Streamable HTTP for remote, scalable setups (like connecting multiple clients to a central MCP server in the cloud).
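
For a feel of the stdio transport, here is a minimal Python sketch of a server loop that reads one JSON-RPC message per line and writes one response per line. It is a toy under stated assumptions: it only answers the `ping` method, and in-memory streams stand in for the real stdin/stdout pipes of a subprocess.

```python
import io
import json

def handle(request):
    """Toy server logic: answers 'ping', rejects anything else."""
    if request.get("method") == "ping":
        return {"jsonrpc": "2.0", "id": request["id"], "result": {}}
    return {
        "jsonrpc": "2.0",
        "id": request.get("id"),
        # -32601 is the standard JSON-RPC "method not found" code.
        "error": {"code": -32601, "message": "Method not found"},
    }

def serve(stdin, stdout):
    """Read one JSON message per line, write one JSON response per line."""
    for line in stdin:
        line = line.strip()
        if not line:
            continue
        response = handle(json.loads(line))
        stdout.write(json.dumps(response) + "\n")
        stdout.flush()

# Simulate the pipes with in-memory streams instead of a real subprocess.
fake_stdin = io.StringIO('{"jsonrpc": "2.0", "id": 1, "method": "ping"}\n')
fake_stdout = io.StringIO()
serve(fake_stdin, fake_stdout)
```

With Streamable HTTP the messages keep the same JSON shape; they travel in HTTP requests and responses instead of over a local pipe, which is what lets many clients share one remote server.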

Do check out the video demo of an MCP example.

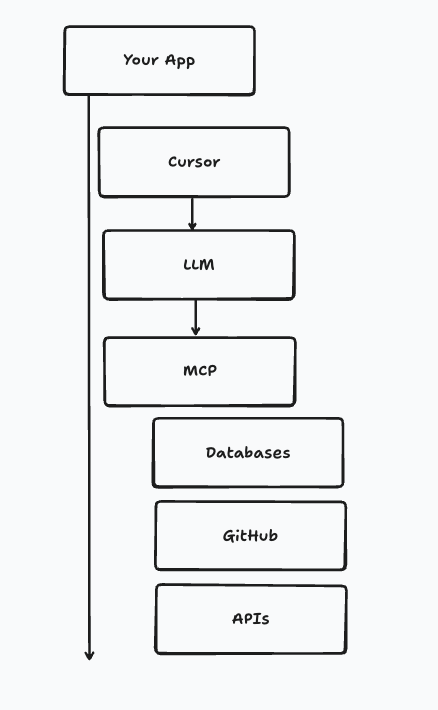

Now, this is how your app will look with MCP in place.

Summary

1 . MCP stands for "Model Context Protocol". It was introduced by Anthropic in Nov 2024.

2 . MCP is like USB-C for AI applications. It simplifies the way we pull data from multiple sources into our AI applications.

3 . MCP can be built in different languages: Java, Python, TypeScript, Rust, etc.

4 . MCP and RAG are different.

5 . MCP bridges the gap when AI apps need information fed from one or more local sources.

6 . Without MCP: LLM only answers from its training and/or prompt context. With MCP: LLM can both think and act by securely calling your tools/data.

Happy Learning!!